As the world shifts from traditional search to AI chatbots, I've been running experiments to see how Google interprets my website.

I recently added JSON-LD structured data to make my information easier for Google to parse. Google's AI answers now take up most (all?) of the page's real estate, and users tend to treat these answers as authoritative truth.

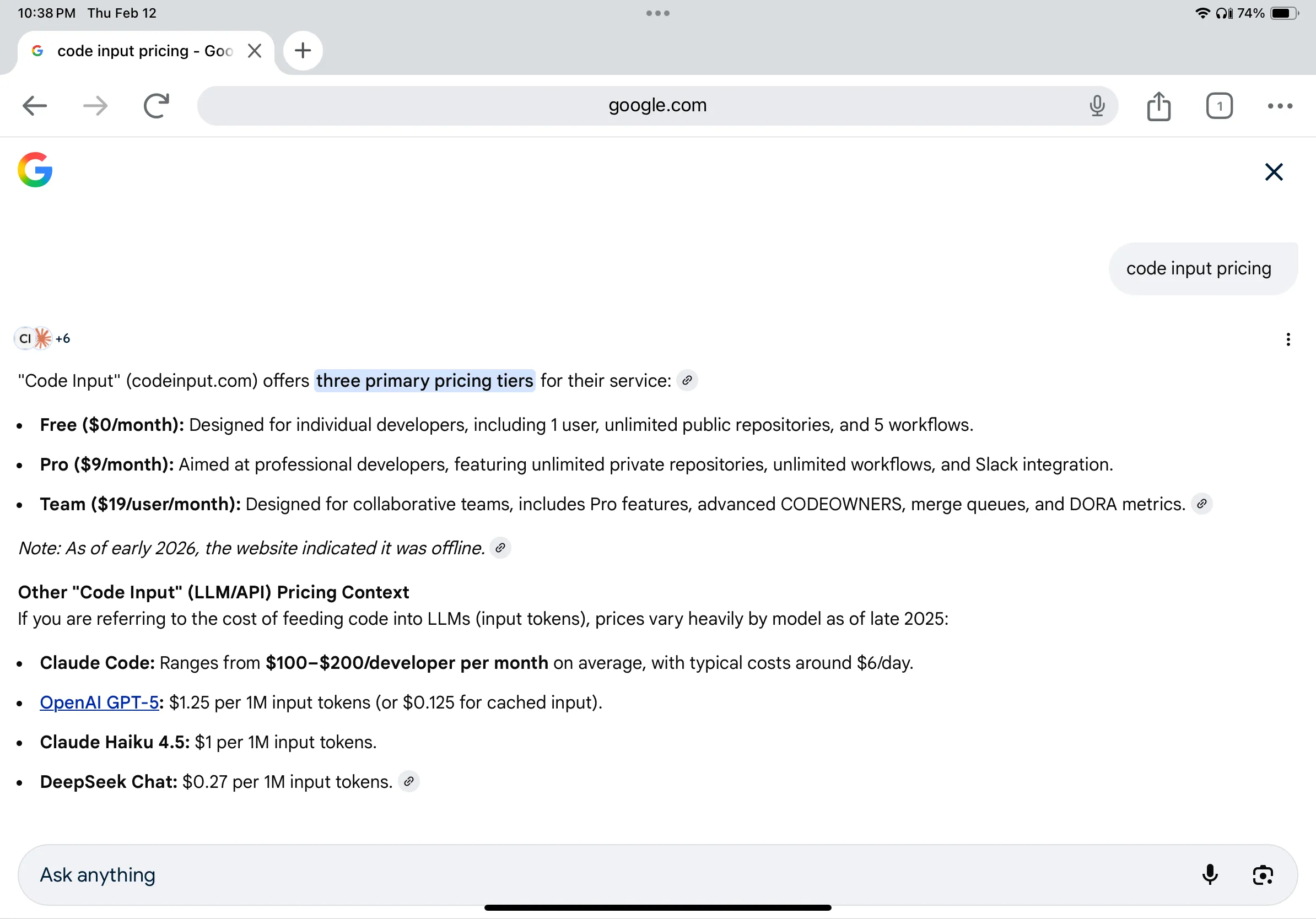

I have a pricing page on my site, so naturally I wanted to test whether my pricing table and FAQ were being picked up correctly.

Great! Google was able to read my pricing table. It's not clear whether this information was synthesized from the HTML content, the JSON-LD, or both, but it's correct.
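For reference, JSON-LD for a pricing table and FAQ might look something like the minimal TypeScript sketch below. It builds schema.org `Product`/`Offer` and `FAQPage` objects and serializes them into a `<script type="application/ld+json">` tag. The plan names, prices, and helper functions (`pricingJsonLd`, `faqJsonLd`, `toScriptTag`) are hypothetical placeholders, not the markup actually on the site.

```typescript
// Hypothetical plan data: names and prices are placeholders, not real pricing.
interface Plan {
  name: string;
  price: number; // monthly price
  currency: string; // ISO 4217 code, e.g. "USD"
}

interface Faq {
  question: string;
  answer: string;
}

// Builds a schema.org Product whose offers describe each plan.
function pricingJsonLd(product: string, plans: Plan[]): Record<string, unknown> {
  return {
    "@context": "https://schema.org",
    "@type": "Product",
    name: product,
    offers: plans.map((p) => ({
      "@type": "Offer",
      name: p.name,
      price: p.price.toFixed(2),
      priceCurrency: p.currency,
    })),
  };
}

// Builds a schema.org FAQPage from question/answer pairs.
function faqJsonLd(faqs: Faq[]): Record<string, unknown> {
  return {
    "@context": "https://schema.org",
    "@type": "FAQPage",
    mainEntity: faqs.map((f) => ({
      "@type": "Question",
      name: f.question,
      acceptedAnswer: { "@type": "Answer", text: f.answer },
    })),
  };
}

// Serializes JSON-LD into a script tag. Escaping "<" as \u003c stops a value
// such as "</script>" from closing the tag early; JSON.parse restores it.
function toScriptTag(data: Record<string, unknown>): string {
  const json = JSON.stringify(data).replace(/</g, "\\u003c");
  return `<script type="application/ld+json">${json}</script>`;
}

// Usage: render the pricing markup for two hypothetical plans.
const pricingTag = toScriptTag(
  pricingJsonLd("Example App", [
    { name: "Starter", price: 0, currency: "USD" },
    { name: "Pro", price: 10, currency: "USD" },
  ]),
);
console.log(pricingTag);
```

Keeping the structured data generated from the same plan objects that render the visible table is one way to make sure the HTML and the JSON-LD can't drift apart.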

Hold on a second. There's a note in the answer that says:

> Note: As of early 2026, the website indicated it was offline.

Wait. Come again?

There's some stuff to unpack here.

First, this website runs as a Cloudflare Worker. Cloudflare (despite some setbacks in recent months) is a highly available service with minimal downtime.

Second, I'm not aware of Google having any special capability to detect whether websites are up or down. And even if my internal service went down, Google wouldn't be able to detect that since it's behind a login wall.

Third, the phrasing says *the website* indicated, rather than *people* indicated, though in the age of LLM uncertainty that distinction might not mean much anymore.

Fourth, it explicitly gives the timeframe as early 2026. Since the website didn't exist before mid-2025, this suggests Google has relatively fresh information. Then again, LLMs!

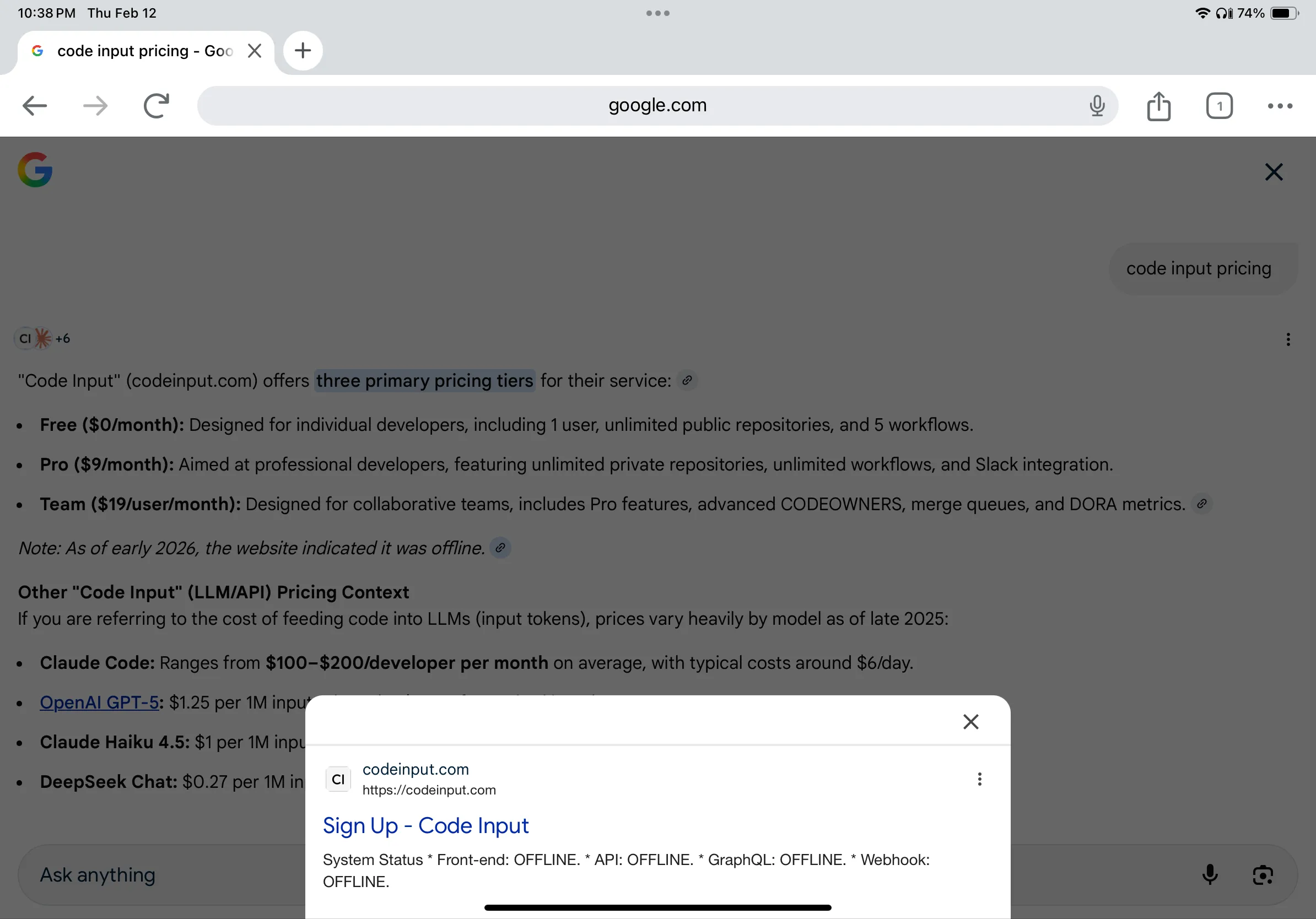

Fortunately, Google still (for now) includes a link showing where this information came from. Citing sources seems to be a foreign concept to many AI companies, so I'm not sure how long this will last.

Well, let's analyze this popup, because it has confusion written all over it. First, both links shown here point to https://codeinput.com, yet just below them it displays the Sign Up page title. Why would a search for pricing include content specifically from the sign-up page? Fortunately, the popup also shows the content that led the LLM to its earlier conclusion.

You might be wondering what this is about. Since I removed it, let me explain.

I used to have a popup showing users the availability status of different services. The popup (a typical React component) was likely either crawled as static HTML (pre-rendered by SSG at build time), or rendered client-side by Google, in which case the status endpoint may have failed to return a proper response. Who knows? It's not like Google lets you see how it interprets your website.

Google's inference apparently read this block and concluded that my entire website was down.
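To make that failure mode concrete, here is a minimal TypeScript sketch of how a status widget could bake the word "offline" into crawlable HTML. The `StatusResult` type, both render functions, and the message wording are hypothetical, not the original React component. When the status request fails (during an SSG build, or in a crawler's renderer), the naive version falls back to an "offline" message; the cautious version renders nothing unless it has a confirmed status.

```typescript
// Hypothetical outcome of a request to a status endpoint.
type StatusResult =
  | { kind: "ok"; services: Record<string, boolean> } // service name -> is it up?
  | { kind: "error" }; // network failure, timeout, or non-2xx response

// Naive renderer: treats "could not reach the status endpoint" as "everything is down".
// If this runs during SSG, or in a crawler whose request to the endpoint fails,
// the word "offline" is written into the HTML that gets indexed.
function naiveStatusHtml(result: StatusResult): string {
  if (result.kind === "error") {
    return `<div class="status">All services are offline</div>`;
  }
  const rows = Object.entries(result.services).map(
    ([name, up]) => `<li>${name}: ${up ? "online" : "offline"}</li>`,
  );
  return `<ul class="status">${rows.join("")}</ul>`;
}

// Cautious renderer: emits nothing when the status is unknown or everything is up,
// and only names services that are confirmed to be down.
function cautiousStatusHtml(result: StatusResult): string {
  if (result.kind === "error") return "";
  const down = Object.entries(result.services)
    .filter(([, up]) => !up)
    .map(([name]) => name);
  return down.length === 0 ? "" : `<div class="status">Degraded: ${down.join(", ")}</div>`;
}

// Simulate a failed status request, e.g. a build with no network access.
const failed: StatusResult = { kind: "error" };
console.log(naiveStatusHtml(failed)); // <div class="status">All services are offline</div>
console.log(JSON.stringify(cautiousStatusHtml(failed))); // ""
```

Even the cautious variant still puts status text into the page whenever a service is genuinely down, so it narrows the problem rather than guaranteeing that an AI summary will read it correctly.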

How To Fix This?

I have no idea. I didn't want to risk it, so I removed the status popup. Still, we don't know exactly how Google assembles the mix of pages it uses to generate its AI answers. Take the example above: the search was about Code Input's pricing page, but the AI assistant pulled content from both the pricing and sign-up pages.

This is problematic because anything on your web pages might now influence unrelated answers. You could have outdated information on some forgotten page, or contradictory details across different sections. Google's AI might grab any of this and present it as the answer. If you allow user-generated content anywhere on your site (like forum posts or comments), someone could post fake support contact info, and Google might surface that to users searching for how to contact your company. Now scammers have a direct route to your customers.